When developing with Spark, you often want to try some queries or instructions and see the results immediately to check whether your idea works. Sometimes just running the code is faster than looking through the reference manual.

A Scala Worksheet is a REPL (Read-Eval-Print Loop) inside the text editor of an IDE (integrated development environment) such as Scala IDE (on Eclipse) or IntelliJ IDEA. You can try expressions, functions, object definitions, and methods in a Scala Worksheet (.sc) file, and you get the results when you save the file. Because the worksheet runs inside the IDE, you also get help such as code completion.
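For example, a worksheet like the following mixes a value, a method, and an object definition. It is plain Scala with no Spark, and the names are hypothetical. When the file is saved, the IDE shows the value of each line next to it, for instance List(SPARK!, SCALA!, WORKSHEET!) for shouted.

```scala
// Each top-level line of a .sc file is evaluated, and its result is shown beside it
val words = List("spark", "scala", "worksheet")

// A method defined directly in the worksheet
def shout(s: String): String = s.toUpperCase + "!"

val shouted = words.map(shout)

// Object definitions are allowed too
object Greeting {
  def hello(name: String): String = s"Hello, $name"
}

val greeting = Greeting.hello("Spark")
```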

This post presents a template for running Spark in a Scala Worksheet. The template is intended to be as general as possible.

Worksheet Template

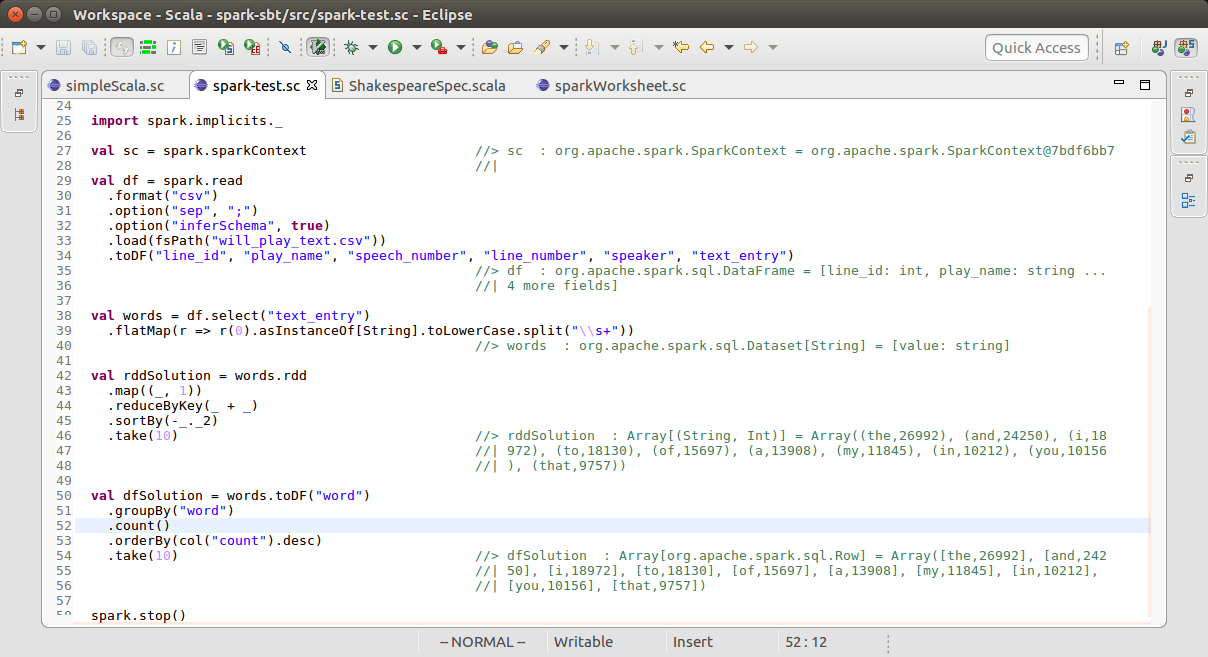

If you copy and paste the following code into your Scala worksheet, you can interact with a local Spark system immediately. The code is for Spark 2.x.

```scala
import org.apache.spark.sql.SparkSession
```
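As a hedged sketch of what such a template can contain (not necessarily the author's exact listing, so its line numbers do not match the ones cited in the text), the following assumes Spark 2.x with its bundled log4j 1.x on the worksheet's classpath. It quiets Spark logging, builds a local SparkSession with the console progress bar turned off, and runs a tiny query. The application name and sample data are hypothetical.

```scala
import org.apache.log4j.{Level, Logger}
import org.apache.spark.sql.SparkSession

// Quiet Spark's log4j (1.x) loggers before the session starts, so start-up
// messages do not flood the worksheet output (ERROR keeps real failures visible)
Logger.getLogger("org").setLevel(Level.ERROR)

// Build, or reuse, a local SparkSession
val spark = SparkSession.builder()
  .appName("SparkWorksheet")                       // hypothetical application name
  .master("local")                                 // one worker thread
  .config("spark.ui.showConsoleProgress", "false") // no console progress bar
  .getOrCreate()

import spark.implicits._

// A tiny query to confirm that the session works
val df = Seq(("a", 1), ("b", 2), ("a", 3)).toDF("key", "value")
val sums = df.groupBy("key").sum("value")
  .collect()
  .map(row => row.getString(0) -> row.getLong(1))
  .toMap

// Release local resources when finished
spark.stop()
```

Level.OFF would silence logging completely; Level.ERROR is chosen here so that genuine failures still show up in the worksheet.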

Lines 7 and 8 suppress Spark log messages, which keeps the start-up messages and the resulting output short. Reducing log output this way also helps avoid the “Output exceeds cutoff limit” error.

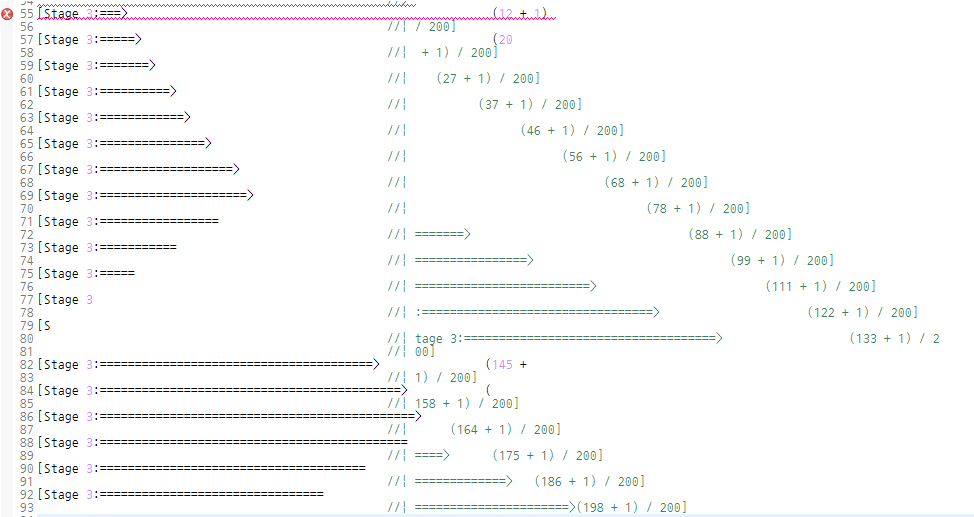

Line 13 suppresses the console progress bar, which is helpful in a terminal but clutters worksheet output. Without this setting, progress-bar fragments get mixed into the results.

You can increase the number of threads that run Spark by setting .master("local[2]") (two threads) or .master("local[*]") (as many threads as logical cores) in line 11. See the Spark Programming Guide for details.
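To see the effect of the master setting, a sketch like the following (assuming Spark 2.x on the classpath; the application name is hypothetical) starts a session with local[2] and inspects defaultParallelism. In local mode this equals the number of threads unless spark.default.parallelism is set, and RDDs created without an explicit partition count use it.

```scala
import org.apache.spark.sql.SparkSession

// local[2]: run Spark locally with two worker threads
val spark = SparkSession.builder()
  .appName("ThreadCheck") // hypothetical application name
  .master("local[2]")
  .getOrCreate()

val sc = spark.sparkContext

// With spark.default.parallelism unset, local mode reports the thread count
val parallelism = sc.defaultParallelism

// parallelize without a partition count splits the data into that many partitions
val rdd = sc.parallelize(1 to 100)
val partitions = rdd.getNumPartitions
val total = rdd.sum() // sum() on numeric RDDs returns a Double

spark.stop()
```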

Troubleshooting

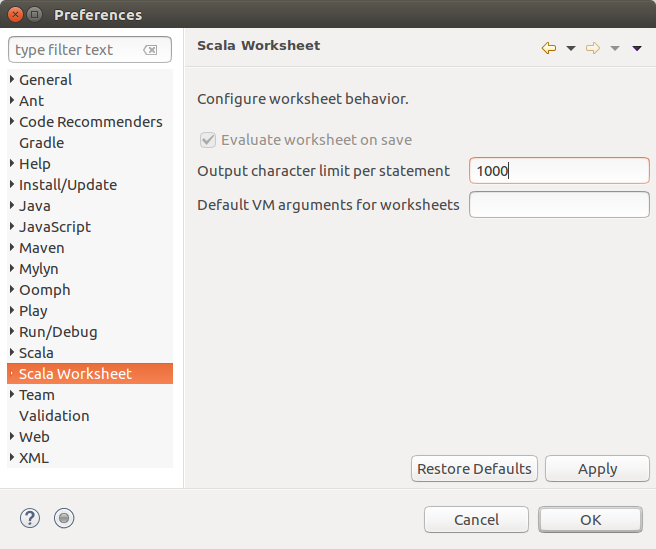

“Output exceeds cutoff limit”

This error is caused by the worksheet's output limit. You can raise the limit in the IDE configuration:

- Scala IDE (Eclipse): In the Window menu, select Preferences to open the Preferences dialog. Select Scala Worksheet, then change the Output character limit per statement.

- IntelliJ IDEA: File -> Settings… -> Languages and Frameworks -> Scala -> “Worksheet” tab -> “Output cutoff limit, lines” option

Character encoding (Scala IDE, Eclipse)

Some characters in the results may be garbled if your text contains non-ASCII characters, especially in East Asian environments such as Korean, Japanese, and Chinese. This problem may occur on Microsoft Windows systems whose default character encoding is not UTF-8.

- Set the JVM output character set to UTF-8: add the following line to eclipse.ini (after the -vmargs line):

```
-Dfile.encoding=UTF8
```

- Set the editor encoding to UTF-8: in the Preferences dialog, select General > Workspace. Scroll down the Workspace preferences to the Text file encoding area and set the text file encoding to UTF-8.
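After changing these settings, a quick check like the following, evaluated in a worksheet, shows which encoding the worksheet's JVM is using and whether East Asian text prints correctly. It uses only the JDK, and the sample string is arbitrary. On the Java 8 runtime that Spark 2.x targets, passing -Dfile.encoding=UTF8 makes the file.encoding property read UTF8, while Charset.defaultCharset() reports the canonical name UTF-8.

```scala
import java.nio.charset.Charset

// Value passed with -Dfile.encoding, or the platform default if none was given
val fileEncoding = System.getProperty("file.encoding")

// Canonical name of the charset the JVM actually uses by default
val defaultCharset = Charset.defaultCharset().name()

// Non-ASCII sample text: if it prints as "?" or mojibake, the encoding is still wrong
val sample = "한국어 日本語 中文"

println(s"file.encoding = $fileEncoding")
println(s"default charset = $defaultCharset")
println(sample)
```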

Working Examples

(Update: 2016-07-03)

Working examples of Scala worksheets that run word-count Spark code are available at https://github.com/sanori/spark-sbt.
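For a quick taste, a minimal word-count sketch in the same spirit (not the repository's code) might look like this. It assumes Spark 2.x on the classpath and uses two hypothetical in-memory lines in place of an input file.

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .appName("WordCountSketch") // hypothetical application name
  .master("local[*]")
  .getOrCreate()

// Hypothetical sample lines standing in for a text file
val lines = spark.sparkContext.parallelize(Seq(
  "to be or not to be",
  "to see or not to see"
))

// Classic RDD word count: split lines into words, pair each word with 1, sum per word
val counts = lines
  .flatMap(_.split("\\s+"))
  .filter(_.nonEmpty)
  .map(word => (word, 1))
  .reduceByKey(_ + _)
  .collect()
  .toMap

// Print the most frequent words first
counts.toSeq.sortBy(-_._2).foreach(println)

spark.stop()
```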

References

- stackoverflow: Spark output log style vs progress-style

- stackoverflow: Eclipse output exceeds cutoff limit in scala worksheet

- stackoverflow: IntelliJ output exceeds cutoff limit in scala worksheet

- stackoverflow: How to stop messages displaying on spark console?

- stackoverflow: Results encoding in Scala Worksheet Eclipse plugin